The cryptocurrency landscape recently intersected with artificial intelligence (AI) in a significant way following the launch of DeepSeek-R1. This open-source reasoning model has generated buzz by matching the performance of leading AI models on a notably low training budget and with innovative training techniques. By challenging the conventional wisdom of model scaling, which typically demands extensive resources, DeepSeek-R1 draws attention to emerging capabilities in reasoning, a focal point for developers and researchers alike.

What makes DeepSeek-R1 particularly intriguing is its accessibility. Because its weights are openly released, unlike those of closed-source models, the AI community was able to adopt it and produce numerous clones within hours of its release. This has sparked discussion about the quality of AI models from China, as DeepSeek-R1 reinforces the narrative that Chinese-developed models are not only competitive but can be at the forefront of innovation.

“The introduction of DeepSeek-R1 marks an important milestone in generative AI and could potentially reshape how foundation models are approached and developed.”

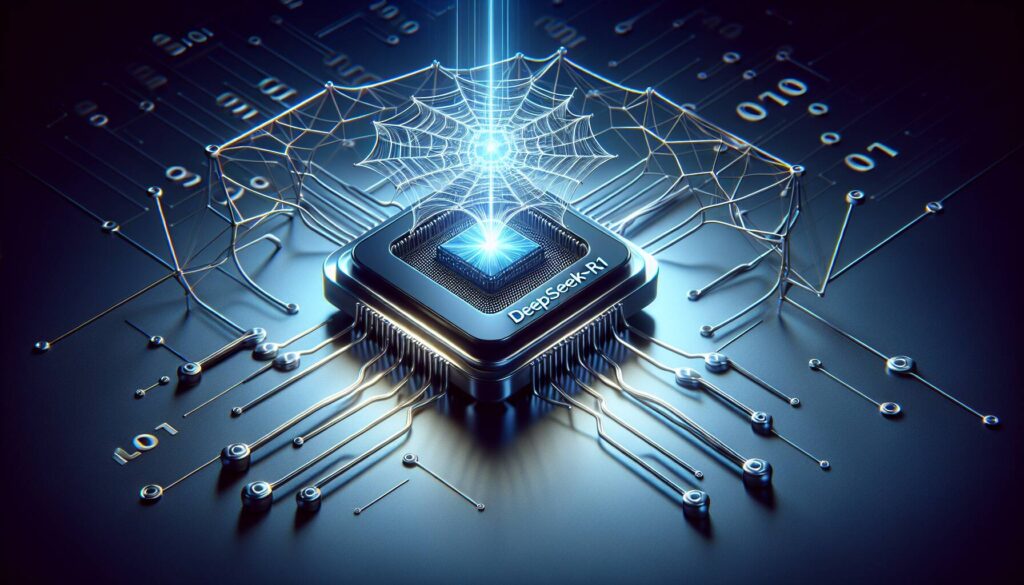

Diving into its design, DeepSeek-R1 evolved from its predecessor, DeepSeek-v3-base, a model with 671 billion parameters. Its training followed the familiar sequence of pretraining, supervised fine-tuning, and alignment with human preferences. Key to its creation was an intermediate model, R1-Zero, which was trained with reinforcement learning and specialized in reasoning tasks. The approach not only demonstrated cutting-edge reasoning capabilities but also showed that synthetic data can be integrated successfully into model training, a property that aligns well with decentralized computing environments.
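To make the synthetic-data step more concrete, the following is a minimal sketch of how reasoning traces from an intermediate model might be filtered into a fine-tuning dataset. It is an illustration under stated assumptions, not DeepSeek's actual pipeline: `toy_reasoner` is a hypothetical stand-in for an R1-Zero-style model, the `Trace` type and `build_sft_dataset` helper are invented for this example, and the filter simply keeps traces whose final answer matches a known reference.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Trace:
    prompt: str
    reasoning: str
    answer: str


def toy_reasoner(prompt: str) -> List[Trace]:
    """Hypothetical stand-in for a reasoning model: returns candidate traces."""
    a, b = (int(x) for x in prompt.split("+"))
    return [
        Trace(prompt, f"{a} plus {b} is {a + b}", str(a + b)),          # correct
        Trace(prompt, f"{a} plus {b} is {a + b + 1}", str(a + b + 1)),  # wrong
    ]


def build_sft_dataset(problems: Dict[str, str]) -> List[Trace]:
    """Keep only traces whose final answer matches the reference answer."""
    kept = []
    for prompt, reference in problems.items():
        for trace in toy_reasoner(prompt):
            if trace.answer == reference:
                kept.append(trace)
    return kept


# Problems with verifiable answers (assumed for illustration).
problems = {"2+3": "5", "10+7": "17"}
dataset = build_sft_dataset(problems)
for trace in dataset:
    print(trace.prompt, "->", trace.reasoning)
```

The design choice worth noting is that the filter needs only a checkable answer, not a human label, which is what makes this kind of dataset cheap to generate at scale.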

The implications of DeepSeek-R1 extend beyond pure AI development; they suggest intriguing potential for integration with Web3 technologies. Its enhanced reasoning capabilities, reliance on reinforcement learning, and emphasis on data provenance resonate with the decentralized principles of Web3. This convergence presents an opportunity to address existing gaps in the AI field and to use decentralized networks for improved reasoning models and dataset generation.
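One reason reinforcement learning is said to suit decentralized networks is that individual rollouts can be generated and scored independently. The sketch below illustrates only that property, using a local thread pool as a stand-in for separate network nodes. The `rollout` function, its toy task, and the 0/1 reward are all hypothetical and chosen for illustration; a real system would also need to distribute model weights and aggregate gradient updates, which this example does not attempt.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def rollout(seed: int) -> int:
    """Hypothetical rollout: guess the sum 2 + 3 and return a 0/1 reward."""
    rng = random.Random(seed)  # per-rollout RNG, so no state is shared
    guess = rng.choice([4, 5, 6])
    return 1 if guess == 5 else 0


seeds = list(range(32))

# Each worker stands in for an independent node scoring its own rollouts.
with ThreadPoolExecutor(max_workers=8) as pool:
    parallel_rewards = list(pool.map(rollout, seeds))

# The same rollouts run one after another, for comparison.
sequential_rewards = [rollout(s) for s in seeds]

mean_reward = sum(parallel_rewards) / len(parallel_rewards)
print("results match:", parallel_rewards == sequential_rewards)
print("mean reward:", mean_reward)
```

Because each rollout depends only on its own seed, the parallel and sequential runs yield identical rewards, which is the property that lets the work be split across machines that do not trust or coordinate closely with one another.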

Key Points on the Release of DeepSeek-R1 and Its Impact on AI and Web3

The release of DeepSeek-R1 has created ripples across the AI community and beyond. Here are the pivotal aspects of this advancement:

- DeepSeek-R1 Overview
  - An open-source reasoning model that challenges traditional training paradigms.
  - Matches the performance of leading models with a significantly lower training budget.
  - Underscores the competition between China and the U.S. in AI innovation.

- Technical Innovations
  - Used an incremental approach, building on the 671-billion-parameter DeepSeek-v3-base.
  - Combined supervised fine-tuning (SFT) with unique reasoning datasets.
  - Produced two models: R1-Zero (specialized in reasoning via reinforcement learning) and DeepSeek-R1 (general-purpose).

- Web3 Implications
  - Reinforcement learning can be parallelized, which aligns well with decentralized networks.
  - Synthetic reasoning datasets can be generated in a decentralized manner, which could improve efficiency and lower costs.
  - Small distilled reasoning models have potential for practical deployment in decentralized applications.
  - Increased focus on reasoning data provenance may enhance transparency in AI outputs.

- Future of Web3-AI
  - DeepSeek-R1’s innovations indicate a shift toward more integrated AI models that operate well in decentralized environments.
  - The model may enable meaningful use cases for Web3, boosting its relevance in the evolving AI landscape.
  - Potential for a “new internet of reasoning” driven by traceability and verification.

The advancements introduced with DeepSeek-R1 signify a potential turning point for both AI development and the role of Web3 technologies, offering intriguing opportunities for innovation and integration.
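The provenance and traceability points above can be illustrated with a small hash chain, the same basic idea that underlies tamper-evident ledgers. This is a minimal sketch under stated assumptions, not a description of any existing Web3 protocol: the record fields and the helpers `record_hash`, `build_chain`, and `verify_chain` are hypothetical names, and a real deployment would anchor the hashes on a shared ledger rather than in a local list.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first link (an assumption)


def record_hash(record: dict, prev_hash: str) -> str:
    """Hash a reasoning record together with the previous link's hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list) -> list:
    """Link each record to its predecessor so later edits are detectable."""
    chain, prev = [], GENESIS
    for record in records:
        digest = record_hash(record, prev)
        chain.append({"record": record, "prev": prev, "hash": digest})
        prev = digest
    return chain


def verify_chain(chain: list) -> bool:
    """Recompute every hash; any altered record or broken link fails."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev:
            return False
        if record_hash(entry["record"], prev) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


records = [
    {"prompt": "2+3", "reasoning": "2 plus 3 is 5", "answer": "5"},
    {"prompt": "10+7", "reasoning": "10 plus 7 is 17", "answer": "17"},
]
chain = build_chain(records)
print("intact chain verifies:", verify_chain(chain))

chain[0]["record"]["answer"] = "6"  # simulate tampering with a stored trace
print("tampered chain verifies:", verify_chain(chain))
```

Anyone holding the chain can rerun the verification without trusting the party that produced the traces, which is the sense in which provenance supports traceability and verification.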

DeepSeek-R1: A Game Changer in the AI and Web3 Landscape

The recent launch of DeepSeek-R1 has sent ripples through the artificial intelligence arena, disrupting conventional narratives about foundation models. Its open-weights approach and innovative training methodologies have led to instant accessibility and a proliferation of derivative clones. The significance of this development becomes even clearer when we explore how DeepSeek-R1 holds its ground against other trending advancements in the AI space.

Competitive Advantages: One of the primary advantages of DeepSeek-R1 is its ability to achieve high performance on a minimal training budget, challenging the belief that top-tier performance requires ever-larger financial investment. This could democratize access to cutting-edge AI, particularly for smaller organizations and startups that often struggle with resource constraints. In addition, the synthetic reasoning datasets generated by the intermediate model, R1-Zero, point to a trend toward more cost-effective and efficient training. Finally, R1 is not limited to models produced in isolation: it opens the door to decentralized inference through Web3 integrations, and its reliance on parallelizable reinforcement learning and synthetic data gives a wider range of stakeholders a way to participate in model training and fine-tuning, fostering a more collaborative approach to AI development.

Competitive Disadvantages: Its weaknesses are equally notable. R1-Zero’s specialization in reasoning tasks comes at the expense of versatility in general tasks such as question-answering, so organizations looking for a multipurpose model may find it falling short and should check whether the general-purpose DeepSeek-R1 covers their applications. Moreover, the flood of clones that followed the release muddies the waters, making it harder for developers and organizations to distinguish genuine advances from mere imitations.

The release is particularly good news for research institutions and tech startups that thrive on innovation. They can use R1's accessibility and cost-efficiency to pioneer new applications while remaining nimbler than larger corporations. Conversely, established players such as Google and OpenAI may find themselves in a precarious position, as the rapid emergence of DeepSeek-R1 clones could erode their competitive edge, particularly in the reasoning domain. The risk of a diluted brand is real: proprietary datasets and foundation models become less of a differentiator in an increasingly crowded field.

DeepSeek-R1 isn’t merely an isolated achievement; it raises fundamental questions about the future of AI’s competitive landscape, particularly as it aligns closely with Web3 principles. The intersection of AI and decentralized networks might just reshape not only how models are trained but also who gets to innovate in this space.